PUBLISHED PROJECTS

VXSlate: Combining Head Movements and Mobile Touch for Large Virtual Display Interaction

Large physical displays offer ample screen real estate, which suits complex problem-solving tasks that require analyzing information at high resolution. However, large physical displays are cumbersome: they often require substantial physical space and constrain users to certain locations.

VR headsets can open opportunities for users to accomplish complex tasks on large virtual displays using compact and portable devices. However, interacting with such large virtual displays using existing interaction techniques might cause fatigue, especially for precise manipulation tasks, due to the lack of physical surfaces.

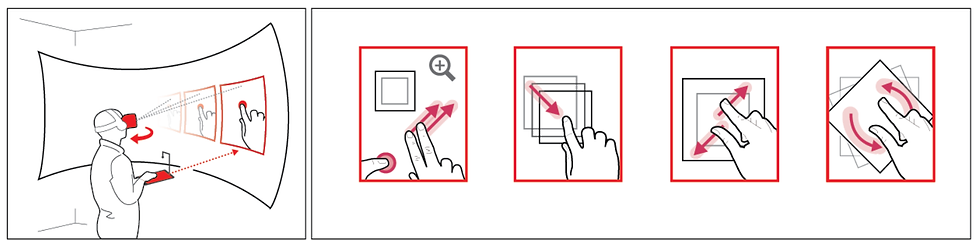

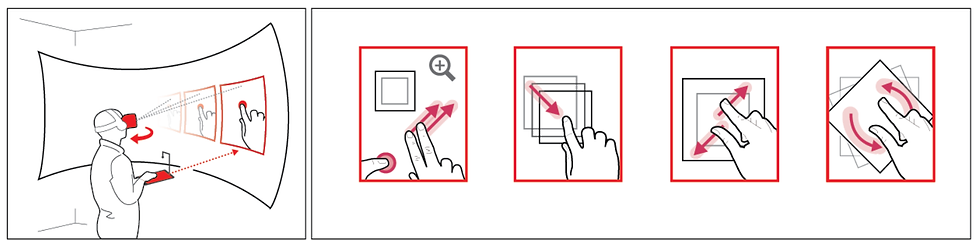

To deal with this issue, we explored the design of VXSlate (Virtually eXtendable Slate), an interaction technique that uses a large virtual display as an extension of a tablet. We combined the user's head movements, as tracked by the VR headset, with touch interaction on the tablet. With VXSlate, the user's head movements position a virtual representation of the tablet, together with the user's hand, on the large virtual display. This allows the user to perform fine-tuned multi-touch content manipulations. VXSlate thus offers users a novel way to manipulate content on large virtual displays in VR while reducing the fatigue caused by the lack of physical surfaces.

The paper has been accepted at ACM DIS 2021. The PDF will be available soon.

Paper

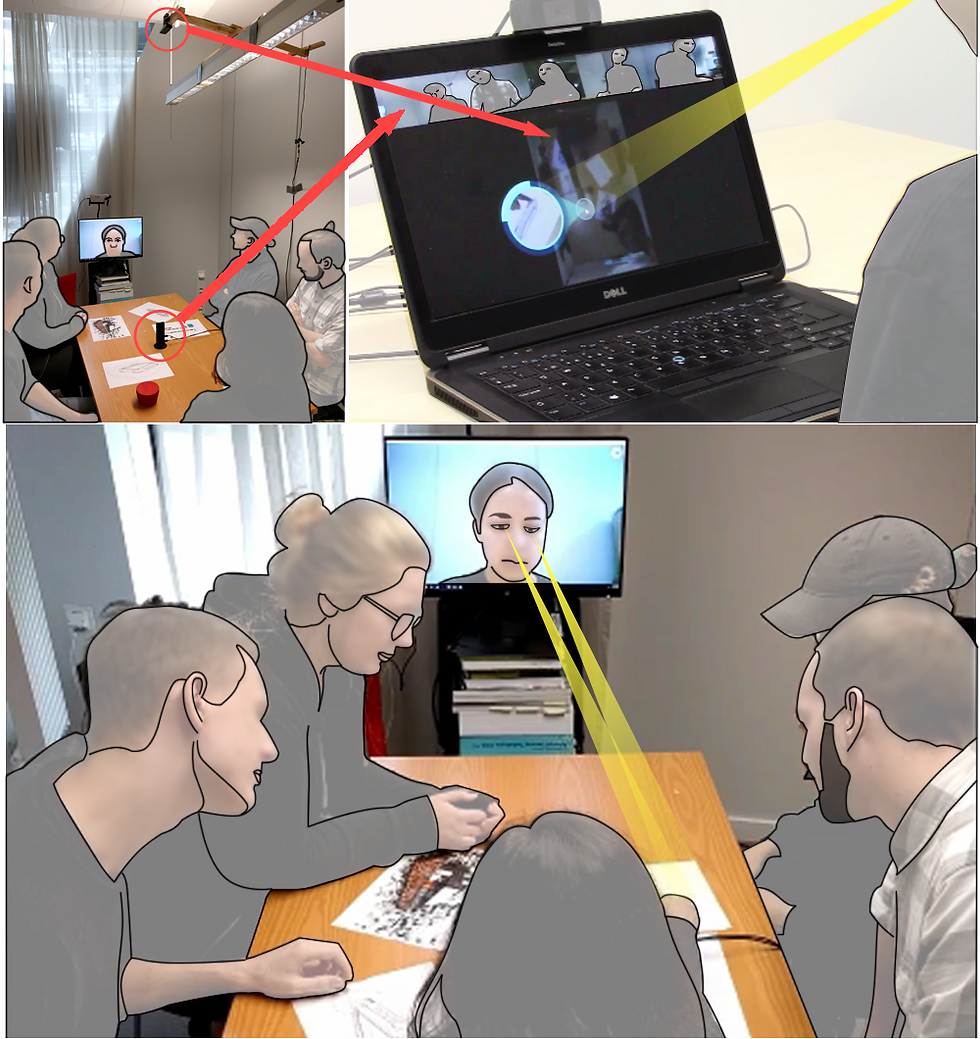

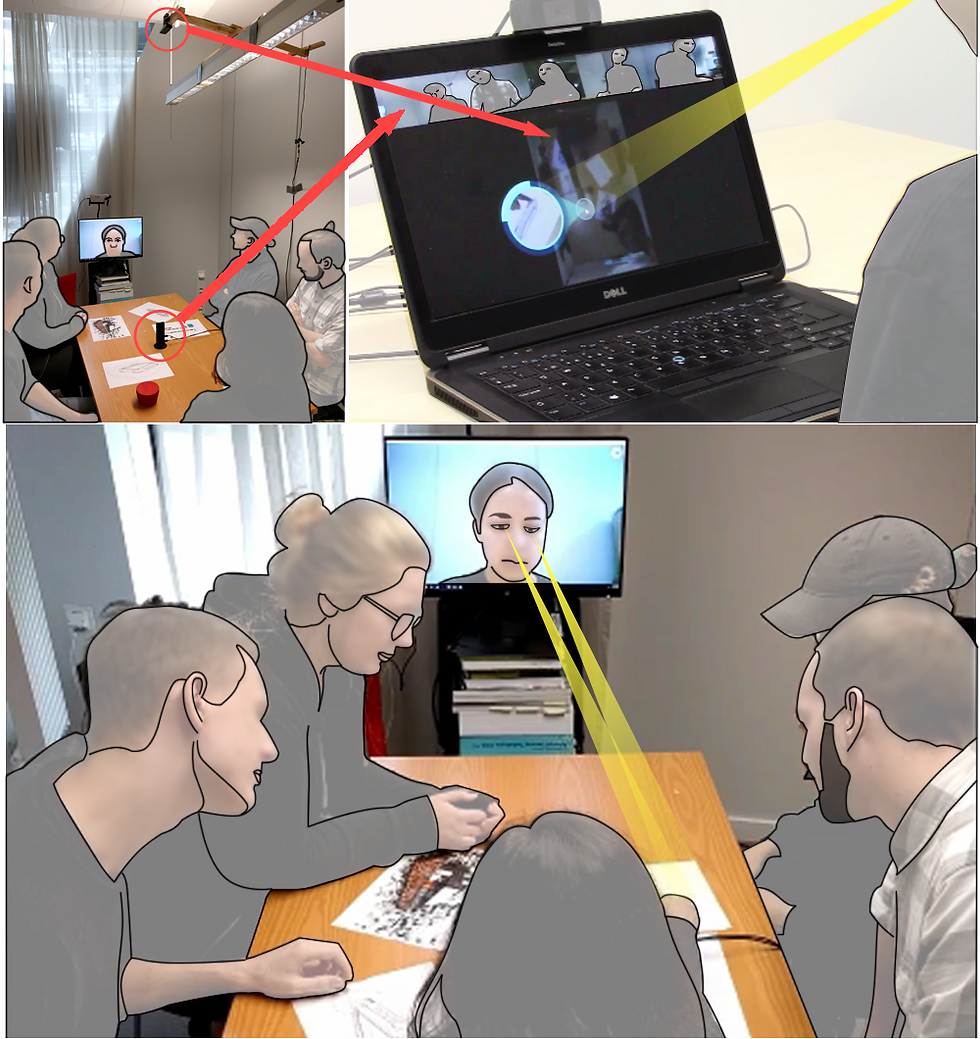

GazeLens: Guiding Attention to Improve Gaze Interpretation in Hub-Satellite Collaboration

Gaze is an important non-verbal cue in communication, as it can unobtrusively convey a person's attention and reduce effort in collaboration. However, in video conferencing, especially in hub-satellite settings where an individual (the satellite) works with a remote team (the hub) sitting around a table in a meeting room, the satellite's gaze at the hub's people and artifacts is not effectively conveyed because of image distortion in the conventional video feed. To address this, we designed a lightweight solution called GazeLens to help hub coworkers better interpret the satellite's gaze. On the hub side, GazeLens captures a panoramic video of the people sitting around the meeting table using a 360-degree camera, and the artifacts on the table using an RGB camera mounted on the ceiling. On the satellite side, these two video feeds are presented on the screen of a commodity laptop. Virtual lenses strategically guide the satellite's attention to suitable locations on the screen while they view the hub's people and artifacts, so that their gaze is better aligned with the corresponding targets on the hub side. Compared to a conventional video conferencing interface, GazeLens could help hub coworkers better differentiate the satellite's gaze at hub people and artifacts, and improve gaze interpretation accuracy.

Le, Khanh-Duy, Ignacio Avellino, Cédric Fleury, Morten Fjeld, and Andreas Kunz. "GazeLens: Guiding Attention to Improve Gaze Interpretation in Hub-Satellite Collaboration." In IFIP Conference on Human-Computer Interaction, pp. 282-303. Springer, Cham, 2019.

MirrorTablet: Exploring a Low-Cost Mobile System for Capturing Unmediated Hand Gestures in Remote Collaboration on Physical Tasks

This project aimed to design and explore a low-cost, minimally instrumented solution for capturing images of users' hand gestures while they interact with mobile devices (tablets, smartphones), in order to better support remote collaboration on physical tasks. We believe that the designed system can be easily adopted in practice by people such as technical experts or technical support staff. We also explored the effect of the unmediated hand gestures captured by the system on remote collaboration using mobile devices.

HyperCollabSpace: Immersive Environment for Distributed Creative Collaboration

In this work, we aimed to design a system that lets a remote user participate immersively in a collaborative session, such as brainstorming or project planning, with a team collocated in another place. To make the system easy to adopt in practice, we employed only off-the-shelf technologies such as mobile devices, a 360-degree lens and Microsoft Kinect. We also chose to visualize the active users who are interacting with the screen in the meeting room as kinetic avatars on the remote user's device. This saves internet bandwidth and makes the system suitable for nomadic work contexts. The remote user can also view and interact with the collocated team from different points of view, depending on their current focus.

Towards Leaning Aware Interaction with Multitouch Tabletops

Multitouch tabletops have been considered an emerging technology. However, one side effect of interacting with interactive tabletops is that users tend to unintentionally lean parts of their body, such as the arm, hand and palm, on the surface to avoid fatigue, resulting in unexpected touch input. While previous work tends to disregard these unintentional touches, my colleagues and I explored ways to take them into account to enrich user interaction with interactive tabletops.

NowAndThen: a social network-based photo recommendation tool supporting reminiscence

In the old days, when photos were still mainly stored in physical albums, people were in the habit of opening their family albums, viewing the photos and reminiscing about memories related to the people in them. Such reminiscence had several positive impacts on our emotions, our self-esteem and our relationships with the people around us. With the development of technology, it is now easier than ever for people to take photos and to store and share them online, especially on social networks. However, as the number of photos taken and shared online keeps growing, people no longer have time to revisit old photos and reminisce as they used to with physical albums. We believe that this is a waste. Therefore, we designed and developed a tool called NowAndThen, which recommends to social network users photos taken in the past that are similar to what they are currently sharing or viewing, hopefully helping them revive positive old memories.

Ubitile: A Finger-Worn I/O Device for Tabletop Vibrotactile Pattern Authoring

While most mobile platforms offer motion sensing as input for creating tactile feedback, it is still hard to design such feedback patterns as the screen becomes larger, e.g. on tabletop surfaces. My colleagues and I designed Ubitile, a finger-worn concept offering both motion sensing and vibration feedback for authoring vibrotactile feedback on tabletops. We suggest that the mid-air motion input space made accessible by Ubitile outperforms current GUI-based visual input techniques for designing tactile feedback. Additionally, Ubitile offers a hands-free input space for the tactile output. Ubitile integrates both input and output spaces within a single wearable interface, jointly affording spatial authoring/editing and active tactile feedback on and above tabletops.

HAV Companions

HAV Companions is an ecosystem of three applications that aim to enhance the experience of viewing audiovisual content on mobile devices by enriching it with haptic feedback. The three applications are:

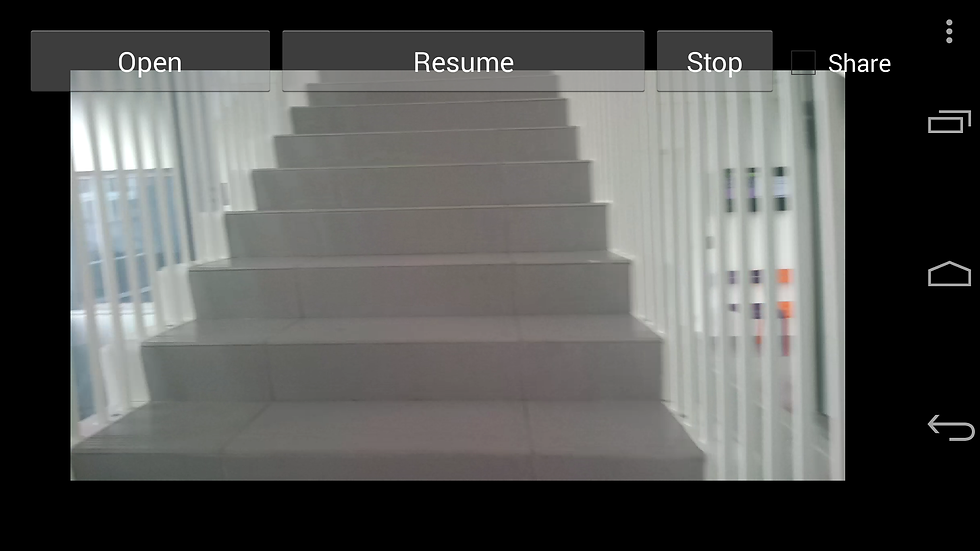

- HAV Recorder: a mobile application that records the user's movement information and synchronizes it with the audiovisual content captured at the same time by the built-in camera. The movement information is gathered through the device's built-in inertial sensors.

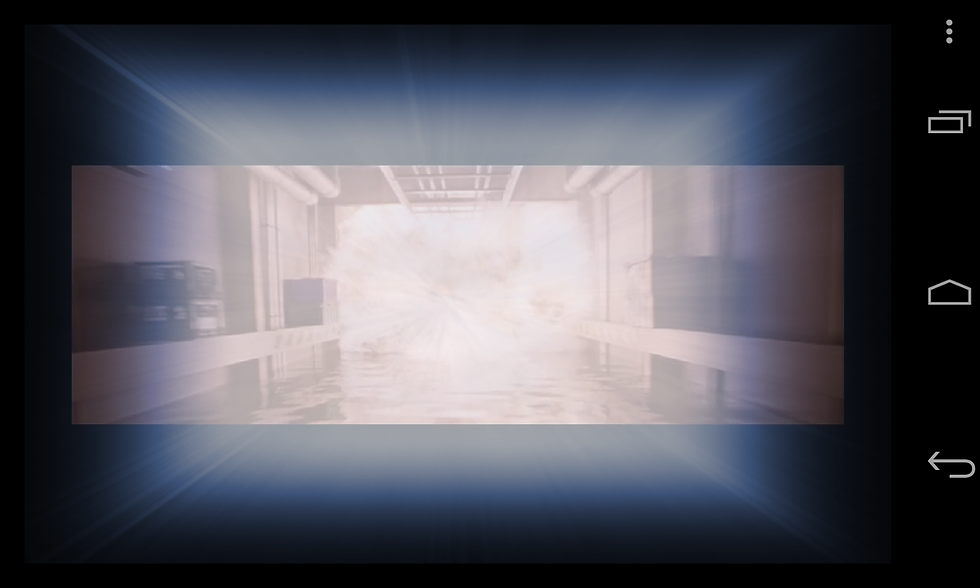

- HAV Player: a mobile application that renders audiovisual content with real-time vibration and visual effects that depend on the provided content, giving users a richer media experience.

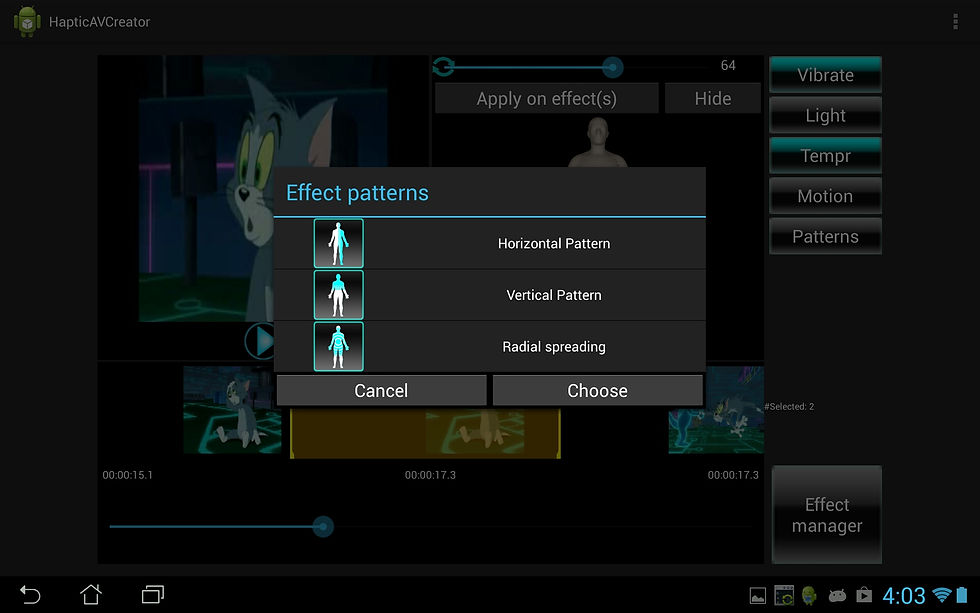

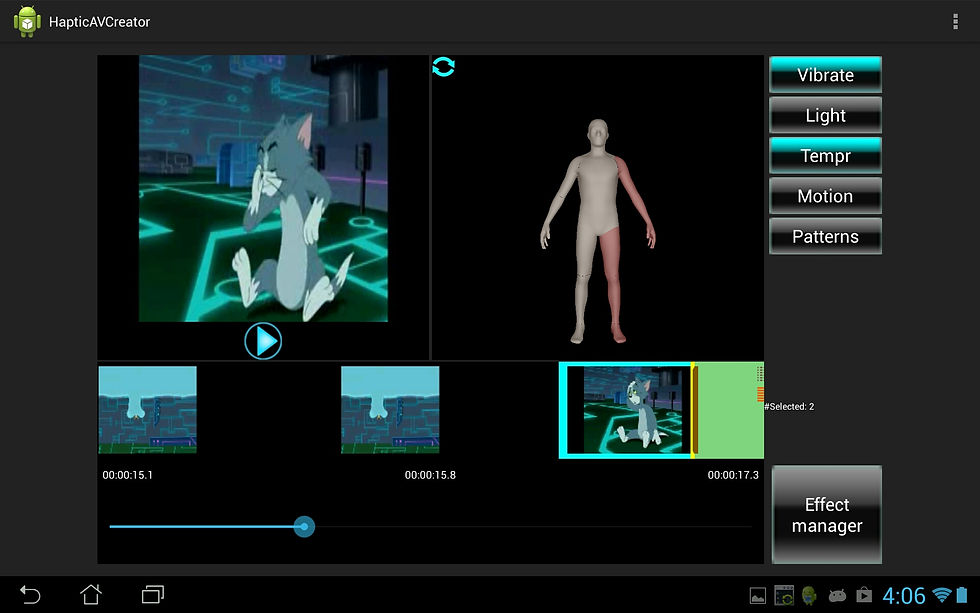

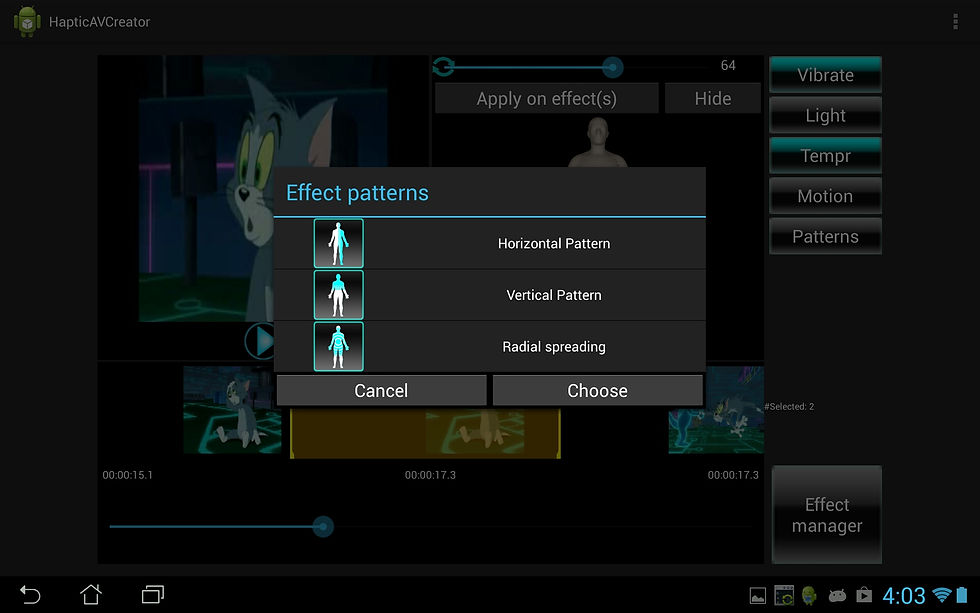

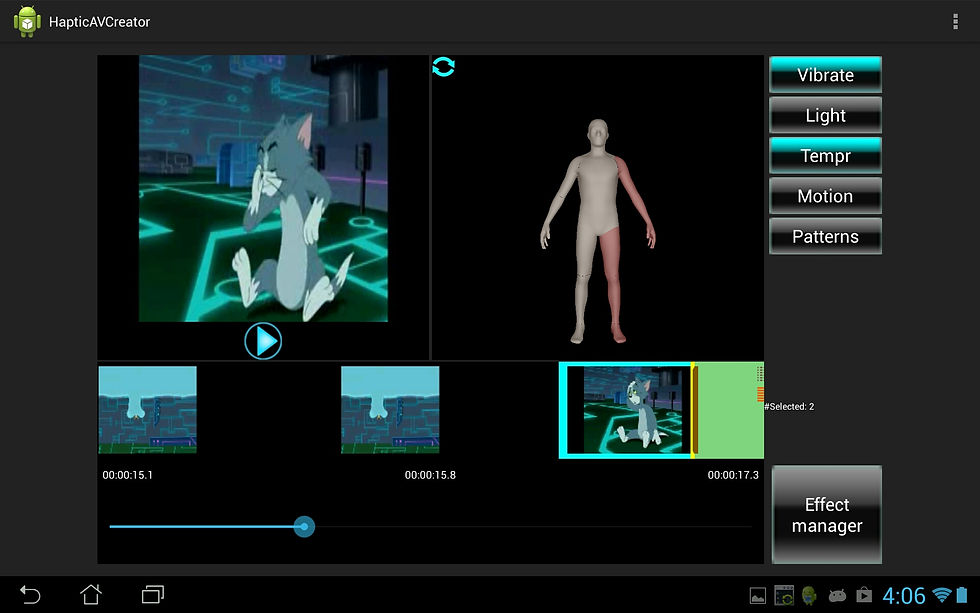

- HAV Creator: a mobile application that allows users to describe different types of haptic feedback to be added to audiovisual content. The application employs a body-centric approach, novel at the time, in which users can specify which body part is affected by an effect at a given moment of the media playback.

Filed patents:

- Method, apparatus and system for synchronizing audiovisual content with inertial measurements (US10249341B2)

- System and method for automatically localizing haptic effect on a body (US20170364143A1)